TetrisAI.jl

Table of Contents

For more detailed informations, read the report for this project (only in French) or the poster

Tetris is a classic video game that had a significant impact cuturally from it’s first release in 1989 on the NES with over 1.5 millions copies sold. It is a game in which tetrominoes fall from the ceiling one by one and the player must prevent the tetrominoes in the grid from climbing to the top by completing full lines of blocks in which case the complete lines disappear and all the blocks above shift one position down. The game becomes more and more complex as the player progresses through the stages of the game because the tetrominoes fall down faster. You can read more on the game here for more details.

Tetris has been proven to be NP-Complete which means that we think it’s impossible to resolve the game of Tetris with a polynomial time constraint and this makes it a great non-trivial environment to develop algorithms for. In the litterature, Tetris is extremely hard to even approximate. The goal would be to try and create the best possible agent so that it can hopefully keep playing Tetris for eternity. Many techniques can be explored when developping an agent for such an environment, but we concentrated our efforts on trying to create the perfect agent using Reinforcement Learning.

Setting #

This project is the subject of a project course at the University of Sherbrooke which we chose to explore at in our own will. We were a team of 3 people: Léo Chartrand, Olivier Thiffault and I. This project was done in two phases. The first phase of the project consisted in building the Tetris environment in such a way that an agent can interact easily with it and implement simple algorithms to rapidly test the framework. This was mostly done by Leo Chartrand and Guillaume Cléroux. In the second phase of the project, we picked up the foundations that they laid off for us in the second phase of the project to try and outperform the first algorithms that were implemented in the first iteration of the project. We wanted to refine a not-so-perfect implementation of DQN and we wanted to find another algorithm that can handle more complex situations. Some details had to be refined in the first implementation of the framework, but that’s essentially what we wanted to achieve.

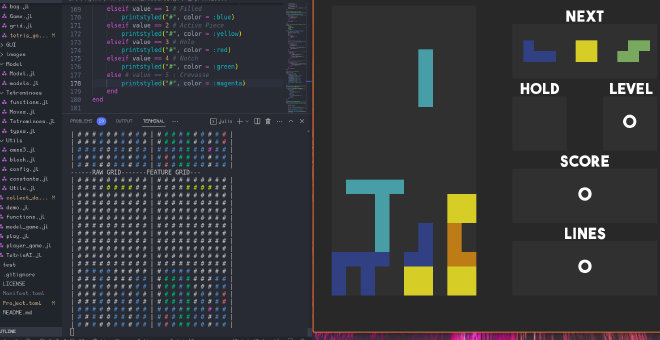

Every single line of code in this project was written in Julia, a great programming language that has fast native matrix manipulations which makes it very practical for machine learning developments since it also supports several common machine learning algorithms. Another nice feature about this project is that we open sourced the code to the Julia community for anyone else to pick this project up and continue improving the results that we’ve achieved below.

Sparse Rewards #

If you look around the litterature, there’s been already many well performing Tetris agents that can probably outperform most humans at the game. However, in most solutions from the litterature, there is almost always a simplification to the original Tetris environment. For example, the most common simplification is that the agent might not have to perform all the actions (UP, RIGHT, RIGHT, RIGHT, DOWN, …) to control the falling piece, but instead just places the piece directly on one of the valid positions on the board. This simplification can enable the agent to evaluate the goodness of the action it just made by placing the piece and thus getting a direct feedback from its actions. The classical setting of the Tetris game would be that the agent has to perform all the actions so that it can have the same parameters to play the game like if it was a human. This is the approach we took in this project.

The reason that simplifications were made in the litterature is because of a particularily challenging phenomenon in reinforcement learning called sparse rewards. Without simplifications, the agent really has some problems evaluating the goodness of its actions. Let’s say that the agent decides that a piece should be placed at the position in the image. The agent would have to execute a long sequence of actions so that the tetromino can reach the desired position. The problem resides in the long sequence of actions because each action taken during that sequence cannot receive direct feedback (also called reward) to whether that action was good or not because the tetromino still is falling. When the piece gets into the desired position, it’s hard to match which actions within that list lead to that reward. The agent could’ve spun the piece to the right 1000 times before it reached it’s position which is probably useless while only 2 other actions actually lead to the piece getting that reward.

Guidance through sparse rewards #

Feature engineering #

Léo Chartrand implemented a feature extraction module in hope to better guide the agent with the goodness of its actions. This module enables the agent to have some additionnal information on some specific caracteristics of the current environment. This way, we create a feature grid which is a replicate of the game grid with additionnal information encoded in it. We identify each cell sequentially by first considering the entire grid as if it was full. Using a recursive function, we then perform a diffusion filling to identify all the empty cells accessible from the top of the grid in a straight line from the original grid. The cells that aren’t marked as empty on the feature grid, but actually are empty in the game grid, be mark them as holes (which are not desired in most Tetris games). Then, each empty cell that has an existing filled cell right above it are marked as notches. Finally, the other empty cells that have at least two other empty cells above them and are surrounded by filled cells are marked as crevasses.

This information can then be provided as an observation state vector to the agent. Before directly giving this vector to the agent, we also provided a way to encode the information in a more concise way using a convolutional neural network (CNN) that can also be chained with other deep learning techniques.

Reward shaping #

Following the feature extraction module, we can use some parts of this module to shape the rewards to generate a feedback for the agent. Each tick of the game, a score is calculated and this score is remembered for the next frame/tick. We compute this score using the following formula:

\( score = (-0.510066 \times height) + (0.760666 \times lines) + (-0.35663 \times nbHoles) + (-0.184483 \times bumps) \)

The lines are the number of completed lines and the bumps is the average bumpiness in the grid which is a squared average of the different in height between adjacent columns. With this score, we compute the reward based on the variation of this score between two ticks. If the score stays the same, then no reward is given to the agent. Please note that in this equation, we punish the agent for a height, the number of holes or the bumpiness in the grid and we reward it for completing lines. The thing is, we consider that the “real” rewards in this game is the actual score of the game and that this reward shaping would only be to guide the agent into obtaining a few first completed lines. This way, we introduce a constant \(\Omega\) (starting at \(\Omega = 0\)) that allows us to give more and more weight to the completed lines the more we complete lines. Each time one or more line is completed in a tick, we increase this constant by 0.1, thus giving more weight to the future completed lines.

\( reward = (1 - \Omega) \times (score_{t-1} - score_t) + \Omega \times (lignes)^2 \)

So, after 10 ticks with some lines completed, we do not give intermediate rewards and we only favor the game score increase. We square the number of lines, because we want to favor the behavior that the agent completes multiple lines at once, which is best for the game score.

Dataset #

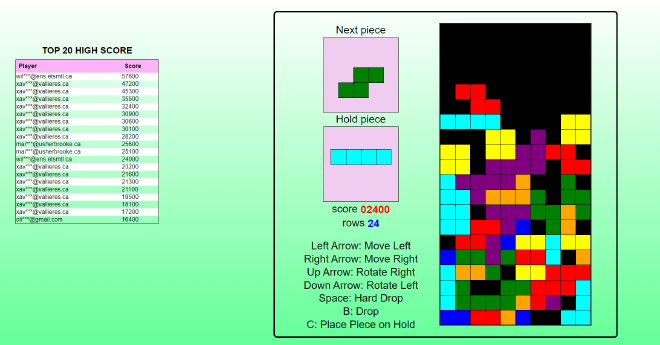

As some great results in the Tetris environment were also achieved, in the litterature, using supervised learning, we had the idea to incorporate that technique into our arsenal of tools to train the perfect again. We wanted to use the immitation learning technique which requires lots of expert data to actually learn some patterns of how the human players score points on this game. The Julia interface that we originally had was a bit cumbersome to deploy to the wide public within the allocated time. Instead, adapted another Tetris game to the rules we’ve set for our own game and implemented Tetris in javascript, this way it would be easily accessible to anyone to play. After each game, if the score was over a certain amount of lines cleared, the game’s data would be sent to a S3 bucket in the cloud so we could then download the data whenever we needed to.

To incite the players to play, which was mostly our student’s cohort and some friend, we decided to throw a contest so that the best scorer would obtain a $40 gift card for the liquor store. This allowed us to collect around 600 good games that can be used for supervised learning. The pretraining still remains to be done properly, but the dataset that we processed to remove some bugs and to only keep the best games is available for download to publicly.

Results #

The two main learning algorithms that we’ve implemented are Deep Q Network (DQN) and Proximal Policy Optimization. Those are common and often considered state of the art algorithms in reinforcement learning since they already demonstrated great performance in a handful of scenarios. Although we already have DQN half-implemented with only one neural network, we had to learn how PPO worked and then implement it in Julia.

If you need some background on the following algorithms, I suggest the following articles to better understand DQN and PPO. You can also checkout our report which some quick introduction into the subject, but it’s only available in French.

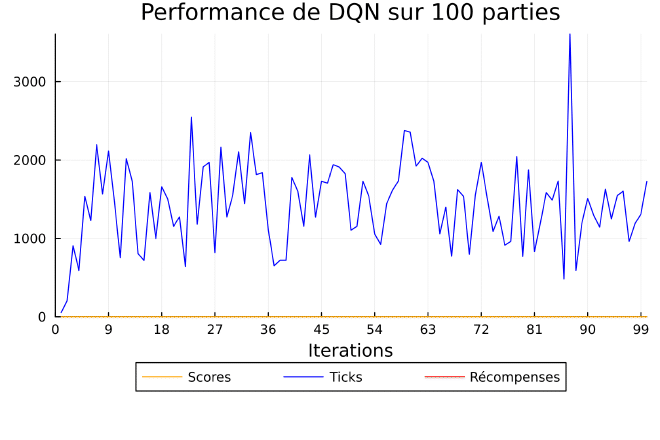

DQN #

We first trained DQN with and without reward shaping and with feature extraction. We can quickly notice that the score of both agents stays at zero the entire time, which signals that the agent is still incapable of completing a single line in the environment. Even though the training was only for 100 episodes, we can also notice that the algorithm seems to encounter a plateau at between 1000 and 2000 ticks for the majority of the training both with and without reward shaping. It’s difficult to see on the plots, but the rewards given to the agent without reward shaping was always zero since the agent can’t complete a single line and the reward was always between -10 and 0 for the agent with reward shaping. We infer that the reward shaping didn’t have a significance impact on the learning process, which was a little unexpected since it helps to give feedback to the agent on the actions taken.

| DQN was trained for 100 episodes. | |

|---|---|

|  |

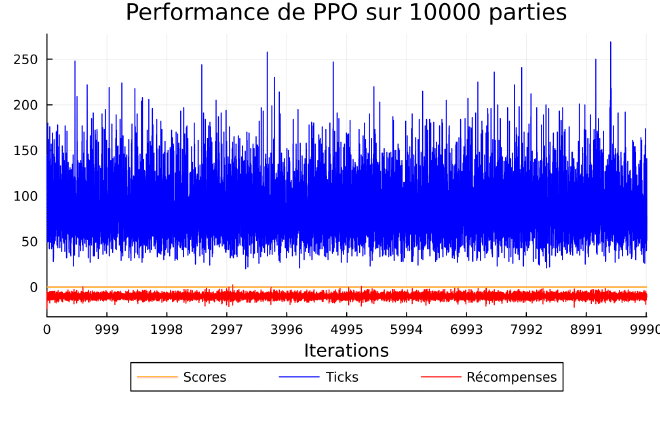

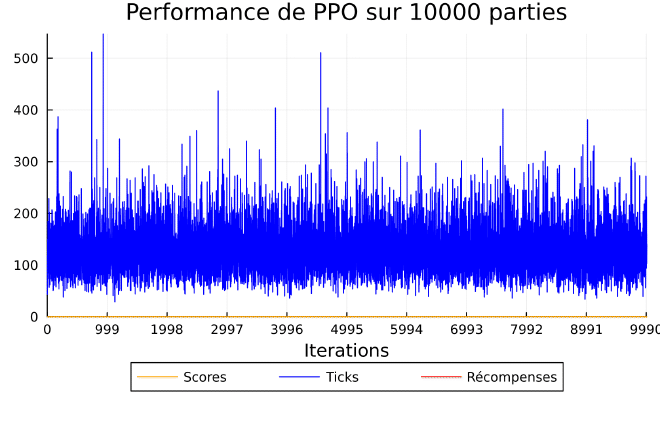

PPO #

PPO agents were also trained on configurations with and without reward shaping while having also the feature extraction module enabled. The reward shaping doesn’t really contribute either to the agent’s learning either for this experiment. There’s also a plateau that has been reached between only 100 and 200 ticks, which is way lower than what DQN has been able to achieve. The score stays at zero and the values for the shaped rewards given also are between -10 and 0 while the non-shaped rewards stall at zero.

| PPO was trained for 10k epidsodes. | |

|---|---|

|  |

Conclusion #

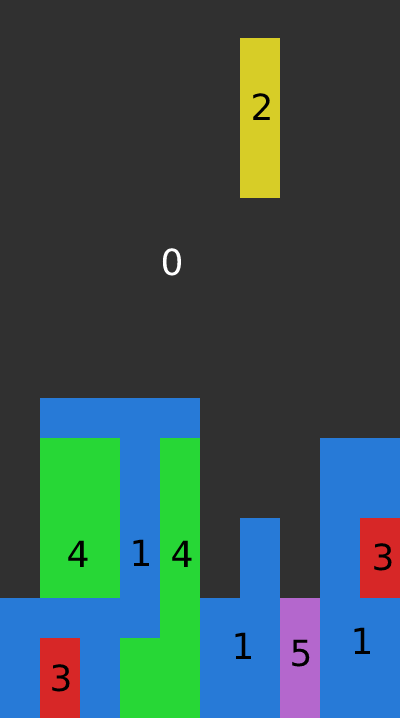

Definetly, we were expecting better results from our DQN and PPO experience. First of all, we were expecting PPO to at least out perform DQN since it has shown itself very useful in many complex scenarios such as Atari games and it’s an algorithm that usually doesn’t require a lot of parameter tuning. At the very least, as expected, PPO was a lot faster to train which enabled us to train it for 10 000 steps during around four hours. We say that PPO performed worse than DQN based on the number of ticks in the graphs, but this is not necessarily correct. If we analyse and compare both demonstrations of DQN and PPO respectively, we can see that PPO just drops the pieces very fast while DQN holds a piece or lets it fall which increases the time before the structure gets to the top of the grid. However, both models execute similar strategies when you look at them in action. In the following figure, we can see that DQN stacks the tetrominoes in a tower generally in the middle that quickly gets to the top and it doesn’t seem like the agent knows very much what he’s doing.

It’s important to note that we are very limited on computing power compared to most research laboratories which slows our training by a good margin. With better hardware and more financial resources, we could’ve let the agent train for days and maybe we could’ve obtained better results. It’s just a little bit hard to judge whether there’s a bug in our implementation or that we just need to keep training. It’s also a very complex challenge for a reinforcement learning agent to perform well in such an environment since there’s over \( 2^200 \) possible board configurations which makes it very complex to learn anything.

Next steps #

As noted at the top of this article, we made this project open source so anyone can use and take the project further either for their own research exploration, for another project course or just for fun. The project is usable pretty easily, but there’s still some work to be done as to deploy the package so that it’s available on the Julia package index. There’s some great documentation already set, but some finishing touches might be useful to help a new user to use this platform.

Also, for most of the best performing algorithms in the litterature such as AlphaGo for example, there is still some heuristics used in many scenarios to help guide the agent in it’s learning. Maybe implementing something like a Monte Carlo Tree Search (MCTS) might increase the performances and allow the agent to learn and actually complete some lines so that it can at least reach it’s first score point.

Due to a lack of time, we didn’t have the time to properly pre-train an agent with the expert dataset detailed above. That would be a great idea to further improve the agent’s performances since it can already give a strategy to follow based on the games that we’ve had that score well over 50 lines. Since we made this dataset public, it can be put to use directly.

Finally, a grid search for the best combinaison of features for a model wouldn’t hurt anyone and most surely only increase the odds of the model performing better. It just happens to take a very long time to do such a search and we were out of time and resources to do so.