Upper Bound 2025 Notes

May 21st

Investigating the properties of neural network representations in reinforcement learning

- Presenter: Adam White

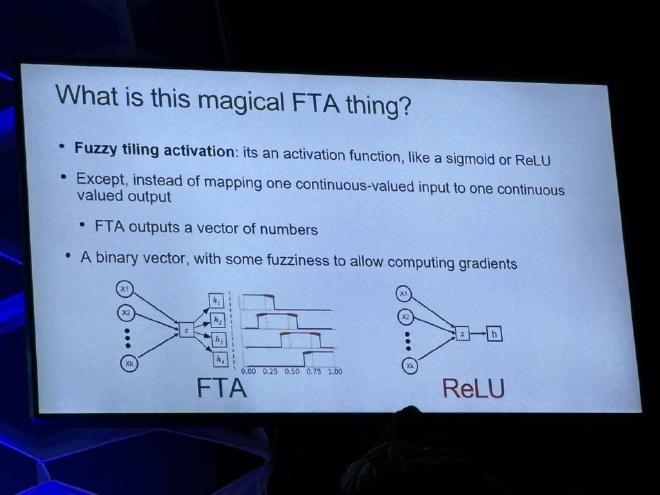

- Summary: The idea of the talk is that if an agent successfully learns a good state representation, that same state representation should be useful to learn a new task/goal within that environment. Adam shows that learning on a task in a simple grid-world environment on a specific goal and transferring the learning to another similar to dissimilar goal works well in a simple 2D grid-world environment with DQN. In the paper, the different tasks are formulated by a different goal position within the grid. However, the main takeaway is that by normally using ReLU activation functions (the most popular method), the transfer learning actually decreases performance compared to training from scratch to attain that new goal. The key finding was that it is more convenient to use the FTA activation function out of the box for transfer learning tasks.

- Main paper of the talk: https://www.sciencedirect.com/science/article/pii/S0004370224000365

- PS: John Carmack was there! :)

- Bonus paper, not related (Fine-tuning without performance degradation): https://arxiv.org/pdf/2505.00913

- Image briefly comparing ReLU and FTA:

Streaming deep reinforcement learning

- Presenter: Rupam Mahmood

- Summary: Streaming deep reinforcement learning is effectively the process of removing the need of using a replay buffer and doing batch updates. Originally, updating on every single data sample collected lead to instability and massive performance drops since the update steps can sometimes over or under-shoot. This talk presents that they were able to achieve reasonable performance using effectively no replay-buffer, no batch updates and no target networks. They briefly present a family of streaming RL algorithms (including Streaming AC, a stripped-down version of SAC) that works with a single set of hyperparameters. There is a few key factors that allow the streaming RL methods to work, but the main deciding factor seemed to be their new optimizer that helps with the overshooting updates.

- Main paper(s):

- (updated version with family of parameters) https://proceedings.neurips.cc/paper_files/paper/2024/file/019ef89617d539b15ed610ce8d1b76e1-Paper-Conference.pdf

- (first paper) https://arxiv.org/pdf/2410.14606

Keen Technologies research development

- Presenter: John Carmack

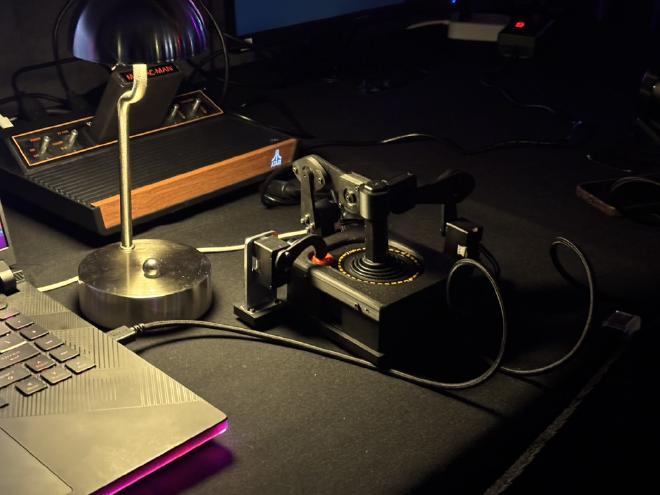

- Summary: John and his research team seem to be concentrated on tackling the Atari problem with a more real-world human-based experience: meaning that the agent has the same inputs and actionnable items as a human more or less (e.g. physically moving the joystick during the training and looking at the image screen with a camera). There is a demo of that case during the conference. He also argues that even thought there are a lot of very good performing models for the Atari benchmark, those results are hardly applicable to a real-world scenario in which the agent has to physically interact and watch the screen. A good metric he’s trying to push for is that it shouldn’t take that many episodes in order to learn something useful from the environment. Also, if an agent is trained on a second game after being trained on a first game, the agent very quickly forgets all about the first game it learned due to the update steps modifying all weights of the agent. He proposes that an Atari benchmark more aligned towards transfer learning between games should be created (such as training the agent through a cycle of games and circling back on the past learned games in order to evaluate the performance).

- Beside the stage, there was a demo of a real-time learning agent that interacts physically with a joystick and has the same input as a human would (the following image shows the joystick setup that is used to control the Atari console, and a camera is fixed right in front of the screen):

Pioneering Deep Learning Acceleration (NVIDIA sponsored content)

- Presenter: Kateryna Nekhomiazh (Solutions Architect, NVIDIA AI)

- Summary: NVIDIA Cosmos and Omniverse is a set of tools to generate/augment datasets and “improve” the training workflow. For example, you can have a base image input of a few different modalities (e.g. LIDAR scan output, segmented image) and generate multiple different videos based on that input image. With a LIDAR scan of a house, you can generate a video of the house being with several different textures, lighting, etc. You can also generate edge cases scenarios (e.g. a child suddenly running down the street in autonomous driving data) in video data.

- Image briefly introducing Cosmos:

May 22nd

Finding shared decodable concepts (and their negations) in the human brain

- Presenter: Alona Fyshe

- Summary: The work presented is focused on analyzing “flat-maps” of the brain (flattened surface area of the brain). They propose a variation of the DBScan clustering algorithm so that they are able to find clusters of concepts that activate some regions of the brain across different subjects (a high-density region is not a cluster if it’s not a region also found in other subjects). They used a dataset of 8 subjects that were acting upon seeing different images of the MSCOCO dataset.

- Paper(s): https://arxiv.org/pdf/2405.17663

- Viewer: https://fyshelab.github.io/brain-viewer/?

Advancing Visual Anomaly Detection: From one-class to zero-shot and multi-class innovations.

- Presenter: Xingyu Li

- Summary: (Didn’t understand much, details are in the papers, but…) The talk presents some efforts done on anomaly detection from one-class, multi-class to zero-shot anomaly detection. The idea is that it’s possible to achieve relatively high performance for single-class anomaly detection, but just adding a single other class (for multi-class prediction scenarios) the performance drops a lot. The presenter presents some methods to extend the great performance to multi-class scenarios, but there are theoretically an infinite number of classes, thus leading them to develop zero-shot classification methods, where a single model can be trained together with using test-time scaling to achieve much higher accuracy in anomaly detection than what was originally possible.

- Paper(s):

- https://openaccess.thecvf.com/content/CVPR2024W/VAND/papers/Bao_BMAD_Benchmarks_for_Medical_Anomaly_Detection_CVPRW_2024_paper.pdf

- https://arxiv.org/pdf/2402.17091

- https://arxiv.org/pdf/2405.04782

- https://arxiv.org/pdf/2303.10342

- https://arxiv.org/pdf/2201.10703

- Probably other papers that I might’ve missed

- Image kinda summarizing the general ideas she presented:

Transformer Reinforcement Learning workshop (Fine-tuning LLMs)

- Tutorial (make sure to use T4 GPUs): https://github.com/huggingface/trl-tuto

- Just learned about gradient checkpointing to trade off compute for memory: https://docs.pytorch.org/docs/stable/checkpoint.html. The rest of the workshop was basically just running colab notebooks using huggingface’s tools (SFTTrainer, GRPOTrainer, etc.)